In the beginning, in order to count, we used, as we still do, our fingers, and sometimes our toes. Not only are they conveniently arranged according to the prime divisors of their sum (2 and 5 multiplied make 10, and no other prime divides it evenly), but we can also fold them up and down as we need, which makes a primitive but very effective memory aid.

In more recent times the abacus was the paradigm of calculation. It was efficient, communicable, and easily learned, mainly because using it is like having many more fingers to fold up and down.

Chinese Abacus.

In the ‘good old days’ of early electro-magnetic computation we programmed the computer directly via switches. Think of an abacus with an automatic left-alignment capability: we still need to know how to use an abacus, but the left-alignment is mechanically automated. Things progressed to abstractions such as punch-cards, which could be prepared in one’s own time and then fed into the computer to perform the calculations.

Punch-card.

As you can see, there are similarities between punch-cards and an abacus: both use a columnar layout, both are of limited scope, and both require familiarity with arithmetic. We can also already see the increase in complexity, both in the density of the information on display and in the amount of meta-information used.

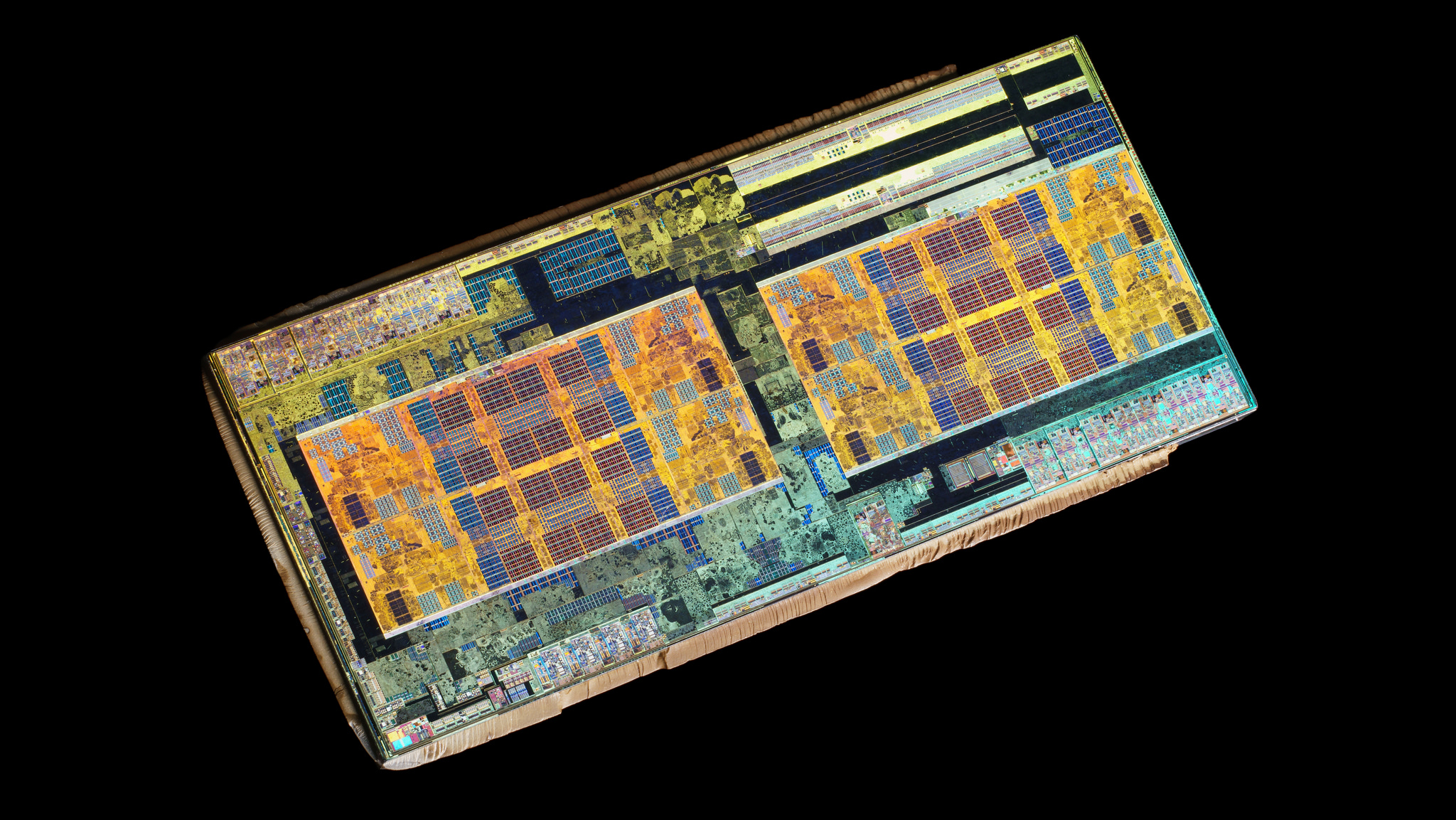

Nowadays we have the x86-64 architecture. Good luck with that.

Modern microchip.

And yet, even though these devices are so tiny and complicated that they operate near the limits of measurability itself, so much so that their designers have to worry about electrons jumping from one wire to an adjacent one and spoiling everything, we don’t need luck to make them work. We use abstractions!

We encode these abstractions in software. The first recognised programme was designed by Ada Lovelace, who worked with Charles Babbage on the Analytical Engine, and it calculated Bernoulli numbers. Ever since, we have been working to increase both the power and the clarity with which we communicate our calculable ideas, to computers and to other humans.
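Lovelace’s programme computed Bernoulli numbers. As a minimal sketch of what such a calculation involves, the Python below uses a standard modern recurrence with exact rational arithmetic. It is not a transcription of Lovelace’s own table of operations, and the function name `bernoulli` is a hypothetical choice for illustration. It assumes Python 3.8 or later (for `math.comb`).

```python
from fractions import Fraction
from math import comb


def bernoulli(n: int) -> list:
    """Return [B_0, ..., B_n] using the recurrence
    sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1, with B_0 = 1.
    This convention gives B_1 = -1/2."""
    b = [Fraction(1)]
    for m in range(1, n + 1):
        # Solve the recurrence for the one unknown term, B_m.
        s = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-s / (m + 1))
    return b


print(bernoulli(8)[8])  # -1/30
```

Exact fractions are used deliberately: Bernoulli numbers are rationals, and floating point would introduce rounding error into every step of the recurrence.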

As a brief digression, programming is at least as much about sharing thought with other people as it is about sharing it with computers. A good piece of software not only runs efficiently on its target hardware; it is also easily understood by other programmers, so that when, not if, it needs to be altered to fix errors or extend its functionality, this can happen with a minimum of stress.

So why IS software complicated? Some of the reasons are:

- Hardware gets more complicated, and so programming it becomes more complicated.

- The ecosystem gets more complicated as we create more and more libraries, each of which specialises in one particular competence (for example numerical calculation).

- New techniques are developed all the time, often coming from academia, especially from Pure Mathematics.

- We demand ever more functionality from our computers, such as real-time communication, or fancy graphics.

- We keep on adding leaky abstractions.

As seen above, computer hardware is becoming more and more complicated as the years go by, and this rate of increasing complexity is well described by one of the most famous heuristics in the industry, Moore’s Law, which states that the number of transistors in dense integrated circuits doubles about every two years (a popular variant says every eighteen months). This has held roughly true for more than half a century, though we are now coming up against hard quantum-mechanical limits. While this means we can perform more calculations, faster, than ever before, it also means the hardware is becoming more complicated, and so the software needed to manage it must keep pace.
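Moore’s Law is an exponential growth rule, N(t) = N0 · 2^(t / T), where T is the doubling period. The sketch below uses a hypothetical helper, `projected_transistors`, that implements only that rule, to show how much the choice of T matters: it compares the two-year period Moore settled on in 1975 with the popular eighteen-month variant.

```python
def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Exponential growth N(t) = n0 * 2 ** (years / doubling_period).

    doubling_period is in years: 2.0 for Moore's 1975 figure,
    1.5 for the popular 'eighteen months' variant.
    """
    return n0 * 2 ** (years / doubling_period)


# Growth factor over twenty years under each assumption.
for period in (1.5, 2.0):
    factor = projected_transistors(1, 20, period)
    print(f"doubling every {period} years: x{factor:,.0f} in 20 years")
```

Over twenty years a two-year doubling period gives a factor of 2^10 = 1024, while eighteen months gives roughly ten times that again: small differences in the heuristic compound quickly.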

A contemporary operating system typically comprises many millions of lines of code, broken into many different parts: a kernel that interfaces directly with the hardware, and many libraries that specialise in tasks such as networking or the graphical user interface. On top of these sit the programs most users need, such as word processors, games, and web browsers.

In Mathematics there has been a more than two-thousand-year quest to define and guarantee the correctness of the subject itself, and quite apart from every novelty of efficient computation, its foundations have undergone radical development in modern times. More than a hundred years ago Set Theory was introduced, and it has served well, if trickily, ever since. The trickiness involved, as well as the seeming vagueness of some of the underlying assumptions, led some, especially L. E. J. Brouwer, to try to reformulate Mathematics on an ‘intuitionistic’ basis. This in turn led, by way of Per Martin-Löf’s intuitionistic type theory, to the recent Univalent Foundations and Homotopy Type Theory (HoTT). HoTT, in particular, shows considerable promise in allowing us to reason both very abstractly and very rigorously about the theory and practice of programming.

The face of a man who looked upon infinity and saw only potential.

Our demands for greater ‘power’ impose constraints that can only be met with greater complexity. Without going into great detail: as we move from the relatively simple one-to-one, client-server architecture to a fully distributed model of computation, as is ubiquitous in Nature (the speed of light guarantees that computation is local), we become more and more reliant on subtle and intriguing protocols such as Paxos, a consensus protocol which, whatever one’s expertise, is still not simple. Developments in this area, from the use of clusters of graphics cards for statistical modelling to the growth of secure data storage systems, tend to be significant both in novelty and in difficulty.
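To give a flavour of why Paxos is ‘not simple’, here is a minimal sketch of single-decree Paxos in Python. It assumes everything runs in one process with no message loss, crashes, or persistence, all of which a real implementation must handle; `Acceptor` and `propose` are hypothetical names for illustration. The key safety rule is in `propose`: a proposer that learns of an already-accepted value must adopt it rather than push its own.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Acceptor:
    """One acceptor in single-decree Paxos (in memory, no failures or messages)."""
    promised: int = -1      # highest proposal number this acceptor has promised
    accepted_n: int = -1    # number of the proposal it last accepted (-1: none)
    accepted_v: Any = None  # value of the proposal it last accepted

    def prepare(self, n: int) -> tuple:
        """Phase 1: promise to ignore proposals numbered below n,
        reporting any value already accepted."""
        if n > self.promised:
            self.promised = n
            return True, self.accepted_n, self.accepted_v
        return False, -1, None

    def accept(self, n: int, v: Any) -> bool:
        """Phase 2: accept (n, v) unless a higher number was promised."""
        if n >= self.promised:
            self.promised = n
            self.accepted_n, self.accepted_v = n, v
            return True
        return False


def propose(n: int, value: Any, acceptors: list) -> Optional[Any]:
    """Run one proposal round numbered n; return the chosen value, or None."""
    majority = len(acceptors) // 2 + 1
    replies = [a.prepare(n) for a in acceptors]
    promises = [(an, av) for ok, an, av in replies if ok]
    if len(promises) < majority:
        return None  # not enough promises: retry later with a higher n
    # Safety rule: if any promising acceptor already accepted a value,
    # adopt the value with the highest proposal number instead of our own.
    highest_n, highest_v = max(promises, key=lambda p: p[0])
    if highest_n >= 0:
        value = highest_v
    votes = sum(a.accept(n, value) for a in acceptors)
    return value if votes >= majority else None


acceptors = [Acceptor() for _ in range(3)]
print(propose(1, "A", acceptors))  # A
print(propose(2, "B", acceptors))  # A: the later proposer must adopt the chosen value
print(propose(1, "C", acceptors))  # None: stale proposal number
```

The second call shows the point of the protocol: once a majority has accepted ‘A’, a later round with a higher number still ends up choosing ‘A’.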

Abstractions, especially as implemented by the congenitally lazy programmer, tend to reveal too many of their underlying assumptions, and hence ‘leak’ complexity both up and down our levels of abstraction, though mainly up. These leaks then require us to stick our fingers in the complexity dyke, and no matter how many fingers we may abstract, the water of complexity will tend to flow downwards, around and past and through our ability to count. (Reality is not necessarily countable.)
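A familiar illustration of a leaky abstraction, chosen here as an example rather than taken from the text: Python’s `float` looks like a ‘real number’, but binary floating point leaks through, because 0.1 has no exact binary representation. The sketch shows the leak and three standard-library ways of plugging it, each with its own cost.

```python
import math
from decimal import Decimal
from fractions import Fraction

# The float type presents itself as "real numbers", but it is binary
# floating point underneath, and 0.1 has no exact binary representation.
naive = 0.1 + 0.2
print(naive)         # 0.30000000000000004
print(naive == 0.3)  # False

# Three ways of plugging the leak, each with its own trade-offs:
print(math.isclose(naive, 0.3))                              # True: compare with a tolerance
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))     # True: decimal arithmetic
print(Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))  # True: exact rationals
```

Each fix is itself an abstraction with its own assumptions: a tolerance must be chosen, decimals are slower, and exact rationals can grow without bound.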

So what can we do about it? The answer is simple: we can provide simplicity through abstraction.

Let me give you an example. When we are young and learning to count, we first learn to count to ten using our fingers. Each count has its own term: one, two, three, and so on. Then we learn how to count to twenty, and the terms associated with it. The thirties follow, and the forties, and patterns begin to form. Then, like a piece of magic, we learn how numbers can be represented in a table.

Having constructed a table of the first hundred numbers, we can not only extend it to the first thousand, but also to the first million, as our patience allows. More importantly, we can abstract over the pattern and use it in its most general sense, letting each entry of the first hundred refer to a table of one hundred, thereby giving a table of tables of numerical size 10,000, or, as the Greeks would have it, a myriad. So we abstract, for we are ‘outside the area contained within the lines drawn’.
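The table of tables can be sketched directly. In the Python below (the function names are hypothetical, for illustration), `hundred_table` builds the ten-by-ten table of 1 to 100, and `myriad` abstracts over that pattern by letting each of its hundred entries stand for a whole hundred table, offset by 100 for each step.

```python
def hundred_table() -> list:
    """The 10 x 10 table of 1..100: row r holds 10*r + 1 .. 10*r + 10."""
    return [[10 * r + c + 1 for c in range(10)] for r in range(10)]


def myriad() -> list:
    """A table of tables: entry k of the hundred table stands for a whole
    hundred table, offset by 100 * (k - 1), giving 100 * 100 = 10,000 numbers."""
    inner = hundred_table()
    return [[[[100 * (k - 1) + n for n in row] for row in inner]
             for k in outer_row]
            for outer_row in hundred_table()]


def flatten(table_of_tables: list) -> list:
    """Read the nested tables back in counting order."""
    return [n
            for outer_row in table_of_tables
            for block in outer_row
            for row in block
            for n in row]


numbers = flatten(myriad())
print(len(numbers), numbers[0], numbers[-1])  # 10000 1 10000
```

Reading the nested tables back in order recovers exactly the numbers 1 to 10,000: the abstraction adds structure without losing anything.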

Of course, for this to work, we need to know and understand the complexities, we need to measure them, and abstract over them, and most of all we need to learn Mathematics, for it is the language of abstraction.

Huge efforts have been made in this field, and are ongoing. The proof assistants Coq and Agda are fabulous developments, and Haskell and Rust are becoming mainstream. In Mathematics itself there is still roiling debate and vigorous argument about the nature and validity of abstraction, both pure and applied. The conversations around these topics spill over into Computer Science, of course, but also into Philosophy, Law, Economics and even Political Science.

Programming is like a mixture of Poetry and Mathematics. It has all the rigour of Poetry and all the interpretability of Mathematics.

You want to know the secret of success in this field, as in so many others?

Play. Watch children learn. Through repeating simple tasks that adults find mind-numbingly boring, children learn the abstractions that give them all their languages, all their games, all their mastery over themselves and other things. So it is with mathematicians: they play with numbers and their patterns. So it is with programmers: computers are the toys with which they learn the abstractions to understand things simply, but no simpler.

Make like a child: be simple, practise, and understand. This is the simplicity of software.

The featured image, taken by John McSporran, turned up in a search for ‘complexity’ among images with reuse rights, and is aptly entitled ‘complexity’. Though not a picture of software, it is undeniably complicated, and also beautiful, and a good deal more intuitively so than any map of dependency graphs. It also, perhaps amusingly, evokes Ted Stevens’ description of the internet as ‘a series of tubes’.

Eoin Tierney is the Science Editor of Cassandra Voices.